Hiya,

Happy May Day! It’s a public holiday in the UK, so I’m making the most of it with a hi, tech. special.

Let’s get going, starting with one of my favorite hi, tech. features:

hi, tech. Punches Upwards 🥊

I’ll ask again: What do people pay McKinsey for?

I’m still not sure we’ve cracked this one. The most common response is, “Well no-one ever got fired for hiring McKinsey haha”, which is a little unsatisfactory.

I ask the question again today because I saw this (annotations mine):

I asked ChatGPT to create the same content and if anything, these points make more sense:

It’s still folderol, but at least you know what you’re getting with the AI.

Speaking of:

🥰️ Will AI ever be empathetic?

I keep seeing that AI will handle the routine tasks, freeing us up to use our superpower, “empathy”.

This superpower is so formidable that we have been hiding it from sight for millennia. If we unleashed it, we’d never get the genie back in the bottle. Maybe now that AI is here, we can start showing an interest in other people.

I do enjoy this well-intentioned naïveté. If we were ever going to be empathetic, we’d have given it a go by now.

Nonetheless, a new study opens up a variety of interesting takes on this topic.

The study is called:

Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum

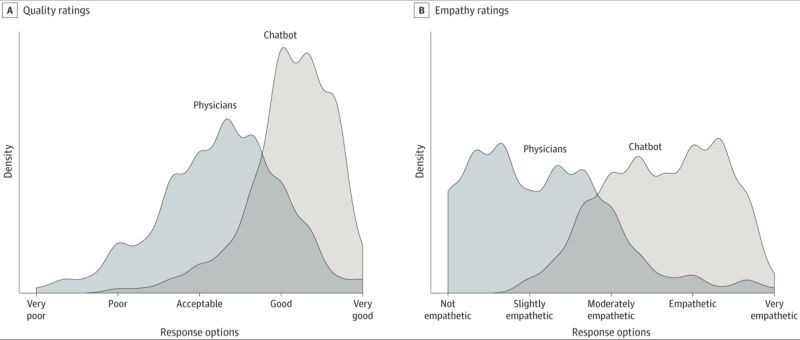

The study used 195 randomly drawn patient questions from a public social media forum, Reddit's r/AskDocs. A team of licensed health care professionals assessed the responses, comparing their quality and empathy.

So, there were already responses on Reddit to the questions, and the researchers got ChatGPT to answer the same questions again. Then the evaluators scored the original, human responses against the new, ChatGPT ones.

The headline findings were:

AI chatbots demonstrated an improvement in recognizing users' emotions, with an accuracy rate of 73% compared to 65% with “real” physicians.

Patients reported a 20% increase in satisfaction levels when interacting with AI chatbots, as opposed to traditional customer support services.

AI chatbots were found to reduce response times by 45%, contributing to an overall better user experience for patients.

In terms of empathetic support, AI chatbots scored an average of 4.1 out of 5, which is a 15% increase from the human responses.

The report also highlights that 62% of patients found AI chatbots to be as empathetic or even more so than human medical professionals.

Patients with mental health issues, in particular, reported a 25% increase in perceived empathy when interacting with AI chatbots compared to human support.

And this is the chart that’s doing the rounds on social media:

Pretty striking, eh?

Before we get too excited: there are serious flaws in the methodology and they are clear enough that I need not spend a lot of time discussing them. For instance:

The “control” for the experiment is not ideal. They took random responses from Reddit, which may not be representative of the average patient-physician interaction. The responders on Reddit are qualified physicians (they explain in the paper how this is verified), but it’s still a weak baseline.

These are one-off questions that do not take into account any long-term issues.

The sample population is also difficult to randomize properly. Instead, we get a cross-section of the types of people who ask questions on Reddit.

“Empathy” is a completely subjective assessment. They used their own scale, which is not externally verified. It comes down to “does this look empathetic?” and I’d suggest physicians on Reddit aim to address the questions factually, first and foremost.

I expect that future studies will build on these points. The authors do acknowledge some of these limitations in the paper.

However, I still believe that the study leads us to ask more questions about this concept of “empathy”. After all, it seems that it is increasingly popular:

AI is excellent at mimicking the behavior it needs to recreate to satisfy a human prompt. If it detects that the current prompt requires this so-called “empathy”, it’ll do you a good impression of empathy.

In most instances, that’s a darn sight more than you’ll get from your human brethren.

Of course, there are component parts to empathy:

Cognitive empathy: Putting yourself in another person’s shoes.

Emotional empathy: Sharing and responding to other people’s emotions.

Empathic concern: Caring for another person’s well-being.

Perhaps all that a prompter requires is the sense that someone is listening. That validation could serve a purpose in some healthcare contexts, but it does not capture what empathy truly means.

I prefer the philosopher Cornel West’s definition:

"Empathy is not simply a matter of trying to imagine what others are going through but having the will to muster enough courage to do something about it."

Otherwise, we’d be as well creating a “thoughts and prayers” bot and leaving it to empathize on our behalf.

Impotent empathy, I’d call it.

The healthcare study did find that the AI responses were more comprehensive than the human ones, so I am not suggesting that AI cannot take action. Responding to queries is very much part of creating a solution. Still, it is down to the people in charge of the technology to put it to greatest effect.

The AI can’t “feel” the patient’s emotions, but does that even matter? How would we know that a doctor was really feeling their emotions, anyway?

I may seem overly cynical, but my central belief here is actually that we undervalue empathy by simplifying it to “#BeKind” bromides. I don’t care that AI can mimic an empathetic response; I care that our expectations of an empathetic response are so low, even a robot can trick us.

In fact, our expectations are so low, any human can trick us by posting the right meme. The same people typically show little-to-no true empathy in everyday life. If they had any empathy for my feelings, they wouldn’t post the memes in the first place.

I see empathy as the key to complex problem-solving. I see it as a companion to curiosity and a catalyst for change. More than anything, I see it as a driving force for innovations that improve people’s lives. It is born of shared human experience, meaning that AI can only ever play the role on-stage without ever truly feeling it. That means AI can never tap into the true potential of empathy as a spark for action.

If we were truly empathetic, we’d have little reason to worry about AI’s progress. As it stands, the AI has probably surpassed us already.

Epilogue: Sure, anyone who knows me will know that taking empathy lessons from this guy would be like taking your childcare lessons from Herod. If I had any empathy, I wouldn’t put my readership through these lengthy newsletters.

Do as I say, not as I do. 🤓