💤 Dreamy AI: Surrealist Ties & GitHub Breakthroughs

+ /Trending, FB Computer Vision, ClarkGPT

I read a quote by a man called James Cameron the other day. I had to look him up to check but yes, he is the Titanic director. It seems he has been busy since then, although his totemic achievements have evaded the attention of this particular captain.

If you think that sounds like an implausibly high level of cultural ignorance, bear in mind that my own chatbot comes with these warnings:

The last album I remember being released is Craig David’s Born to Do It.

But anyways, Jam Cam had this to say:

“[Dreams] are kind of like a generative AI. I think they’re making imagery from a vast dataset that’s our entire experience in life, and … then another part of our brain is supplying a narrative that goes along with it and the narrative doesn’t always make much sense.”

It’s an intriguing notion, so let’s stick with our iceberg imagery for just a few more moments.

The surrealists placed huge significance on the meanings of dreams. They believed that society paid too little attention to these messages from the unconscious mind, just because their source was under the surface.

I do wonder what they would have thought of a human reservoir like the corpus of knowledge used to train ChatGPT. After all, its outputs rely on serendipity and its responses often defy conventional logic. Nonetheless, the source of these responses is our shared, collective experience. The dream parallel is not as wacky as it seems.

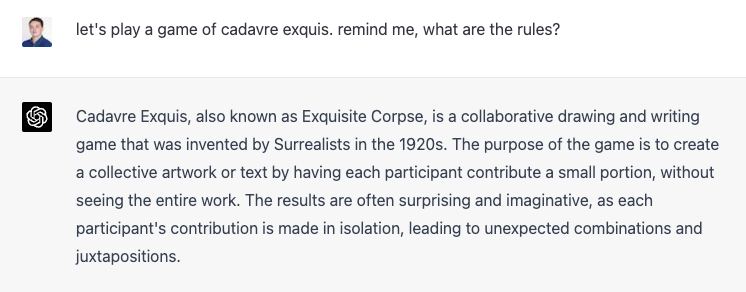

The surrealists had this game called cadavre exquis, where each participant would write or draw something and the others would build on this without seeing the full work.

We gave it a go and I’m sure you’ll agree, Dalí himself could never do better:

Mr Cameron, if you’re reading and you need a honey-voiced Ulsterman to play the lead, gimme a call. Or I can play a shipyard worker on Titanic II - whatever works for you.

/trending

A lot of people (no-one you know, but they’re definitely real) ask me how I keep my finger on the pulse. It’s not easy, although it does include going to https://github.com/trending every morning.

Head over there and you’ll see the most popular experiments and projects developers have released. Recently, it has become all the more revealing as we try to understand what’s next for AI.

This week, there has been an increase in the number of “Auto-GPT” projects. These projects turn OpenAI’s API into an autonomous agent that can act on behalf of the user.

I’ve been working with this one today and it’s brilliant. I can program an AI assistant with just a few text prompts and off it goes:

I decided to create one that would keep up to date with AI trends and then create scenarios to test users on their knowledge.

Amazingly, it then decided to create its own agent called AITaskMaster. It briefs this new agent with the job of creating mini-challenges and then reviews the quality of the output.

This is the first challenge it came up with:

Challenge: Data Privacy and Security

You are a data scientist tasked with deploying an AI-powered recommendation engine for a client. The engine needs to use customer data, including demographic information, purchase history, and search behavior. However, the client is concerned about the privacy and security of their customers' data. You must ensure the secure transfer, storage, and use of sensitive data in the AI-enabled application. What steps will you take to address these concerns?

Solution: To overcome this challenge, you could take several steps to address the client's concerns about data privacy and security.

Here are some steps you could take:

1. Data Minimization: Minimize the amount of data used in the AI model to the minimum required for successful training and inference. Strictly adhere to only keeping data that is required.

2. Data Anonymization: Use anonymization techniques such as techniques to de-identify the data, discard any personally identifiable information such as names, social security numbers, addresses in the dataset so that it cannot be linked back to a customer's real identity.

3. Data Encryption: Encrypt the data at rest and in motion to protect against unauthorized access.

4. Data Access and Sharing Controls: Implement access controls, audit trails, and monitor user access to the data. Also, ensure data is only shared with authorized individuals or entities.

5. Data Monitoring and Governance: Regularly monitor and assess the system to ensure compliance with data privacy and security regulations and best practices.

By taking these steps, you can ensure that sensitive customer data is protected throughout the AI system's life cycle, from data acquisition and training to inference and output.
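For the curious, here is a rough sketch of what steps 1 and 2 might look like in Python. The field names, the sample record, and the key are all made up for illustration, and a keyed hash is strictly pseudonymization rather than full anonymization, so treat this as a starting point and not as the agent's (or anyone's) official answer.

```python
import hashlib
import hmac

# Hypothetical field names, invented for this illustration only.
FIELDS_NEEDED = {"customer_id", "age_band", "purchases", "searches"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, secret_key: bytes) -> dict:
    """Step 1: keep only the fields the model needs.
    Step 2: swap the raw customer ID for a pseudonym."""
    kept = {k: v for k, v in record.items() if k in FIELDS_NEEDED}
    kept["customer_id"] = pseudonymize(str(record["customer_id"]), secret_key)
    return kept


# Made-up example data; in practice the key would live in a secrets manager.
raw = {
    "customer_id": 42,
    "name": "Rex Owner",
    "address": "1 Example Street",
    "age_band": "35-44",
    "purchases": ["dog bowl"],
    "searches": ["smart glasses"],
}
clean = prepare_record(raw, secret_key=b"demo-key-not-for-production")
```

The name and address never leave `prepare_record`, and the same customer always maps to the same pseudonym, so the recommendation engine can still link a customer's purchases together.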

A pretty good start, no?

Right this moment, my Sim-GPT agent is creating a script to turn this into an interactive exercise. I can set it up to complete this same task every day, using the latest industry news and creating a new scenario.

If it’s trending on GitHub, it’ll be big news for everyone in a few months’ time.

Facebook cracks object detection

Facebook has announced the Segment Anything model for computer vision.

Here’s what it is:

A segmentation model that can adapt to specific tasks without requiring task-specific modeling expertise, training compute, or custom data annotation.

By “segmentation”, they mean defining the boundaries between objects in an image.
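To make that concrete, here is a toy sketch in Python. It has nothing to do with how Segment Anything works internally; it only assumes that a segmentation result can be pictured as a grid where each pixel carries an object label, and the function name and the tiny grid are my own inventions.

```python
def boundary_pixels(mask):
    """Return the (row, col) positions that touch a differently labelled neighbour."""
    rows, cols = len(mask), len(mask[0])
    edges = set()
    for r in range(rows):
        for c in range(cols):
            # Check the four direct neighbours: right, down, left, up.
            for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] != mask[r][c]:
                    edges.add((r, c))
                    break
    return edges


# A made-up 3x4 "image" containing three objects labelled 0, 1 and 2.
mask = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 1, 1],
]
edges = boundary_pixels(mask)
```

Finding the edges is trivial once you have the labels. Producing the labels in the first place is the hard part, and that is the segmentation job.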

This is a very laborious and challenging task for computer vision companies. The problem isn’t that they lack the technology to do it, but rather that they depend on structured and labelled data. That data is hard to come by, so they end up having to annotate images and then train the model.

The hope is that this will lead to “tighter coupling between understanding images at the pixel level and higher-level semantic understanding of visual content”.

In the demo, it makes everything look like it was painted by Patrick Caulfield:

Here’s why it matters:

Any notion of augmented reality relies on instant object detection. This model (which Facebook hopes will be foundational for lots of technologies) can generalise to new domains without training.

It could lead to developments like shoppable video, for example on entertainment platforms like Netflix.

I can also imagine it being used for wildlife conservation, as well as medical research.

Google apparently built the same thing five years ago (according to its engineers) and chose not to release it. (Detecting a theme with them yet?)

Here’s why Facebook is still rubbish:

They could have picked any example of how this would work within smart glasses, right? Literally anything - identifying martians or distinguishing cruffins from muffins. It’s a stylised, hypothetical example and no-one expects it to be realistic.

They went for this:

So the user looks at a dog bowl (on the right), and the billion-dollar AR tells them in a note beside the empty bowl that Rex was fed 20 minutes ago.

I’d throw the glasses out the window immediately. It’s almost sarcastic, isn’t it? I mean, I can see with my own eyes that the dog had its dinner.

Can you imagine wearing them all day? You just miss the bus - you can see it heading on down the road as you arrive at the bus stop. THE BUS DEPARTED 30 SECONDS AGO.

Trip over a log as you walk down the street. 5 seconds later: MIND YOU DON’T TRIP.

I get the feeling this creative genius was involved: