🕵️‍♂️ Generative AI Agents

🤯 A genuinely exciting, fun research paper

Good day.

I read quite a few academic papers, rarely by choice and scarcely for fun.

When I see one that is exciting and vibrant, I feel obliged to share it with you. Yes, this might be the first one I’m sharing with you.

In this study, Google and Stanford researchers set about creating “generative agents” and then letting them loose in a sandbox environment that looks like Pokemon Blue.

Below, I’ll summarise the key findings and answer:

What are generative agents?

What happened in the experiment?

How did it go?

Are there any ethical concerns? (Yes, obviously.)

How could these agents be applied outside of gaming?

"We introduce generative agents, computational entities that use large language models to simulate human behavior."

What are generative agents?

Generative agents are interactive computational entities that simulate human behaviour using large language models (think ChatGPT).

They are designed to store a comprehensive record of their experiences, reflect on these experiences to deepen their understanding, and retrieve relevant information to inform their actions. The paper describes the architecture as three main components: a memory stream (which records experiences and retrieves the relevant ones), reflection, and planning.
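To make the store, retrieve, reflect loop a little more concrete, here is a toy sketch in Python. It is not the paper's implementation: the `Agent` and `Memory` classes, the word-overlap scoring, and the example memories are all my own assumptions, and a real generative agent would hand the reflection step to a large language model rather than gluing strings together.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    step: int   # when the experience was recorded (hypothetical tick counter)
    text: str   # plain-language description of the experience


@dataclass
class Agent:
    name: str
    memories: list[Memory] = field(default_factory=list)
    clock: int = 0

    def observe(self, text: str) -> None:
        """Store an experience in the agent's memory."""
        self.clock += 1
        self.memories.append(Memory(self.clock, text))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return the k memories sharing the most words with the query,
        breaking ties in favour of the more recent memory.
        (Assumption: word overlap stands in for real relevance scoring.)"""
        query_words = set(query.lower().split())

        def score(m: Memory) -> tuple[int, int]:
            overlap = len(query_words & set(m.text.lower().split()))
            return (overlap, m.step)

        ranked = sorted(self.memories, key=score, reverse=True)
        return [m.text for m in ranked[:k]]

    def reflect(self, topic: str) -> str:
        """Condense related memories into a higher-level note and store it.
        (Assumption: a real agent would ask a language model for this.)"""
        related = self.retrieve(topic)
        insight = f"Reflection on {topic}: " + "; ".join(related)
        self.observe(insight)
        return insight


agent = Agent("Isabella")
agent.observe("planned a party at the park")
agent.observe("ate breakfast at home")
agent.observe("invited Yuriko to the party")
print(agent.retrieve("party"))
```

Asking this agent about the "party" surfaces the two party-related memories, newest first, and skips breakfast. The point is only that what an agent does next depends on what it can pull back out of its own history.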

What happened in the experiment?

The researchers added 25 generative agents as non-player characters (NPCs) in a Sims-style game world.

They simulated the lives of these agents in the game world for two days, focusing on four primary settings: the home, the office, the park, and the gym.

During the simulation, the generative agents interacted with each other, engaged in various activities, and exhibited diverse behaviours based on their individual experiences and the context of each interaction.

The generative agents stored their experiences in their memory modules, reflected on these experiences, and used them to inform their future actions.

To evaluate the agents' behaviour, the researchers conducted two evaluations: a self-report evaluation, and a Turing Test-like evaluation with human judges.

How did it go?

The agents performed very well in their evaluations. Some human judges remarked that they were much more believable than even the human conversations that were used as the control for the experiment.

The agents did take on something of a life of their own, too.

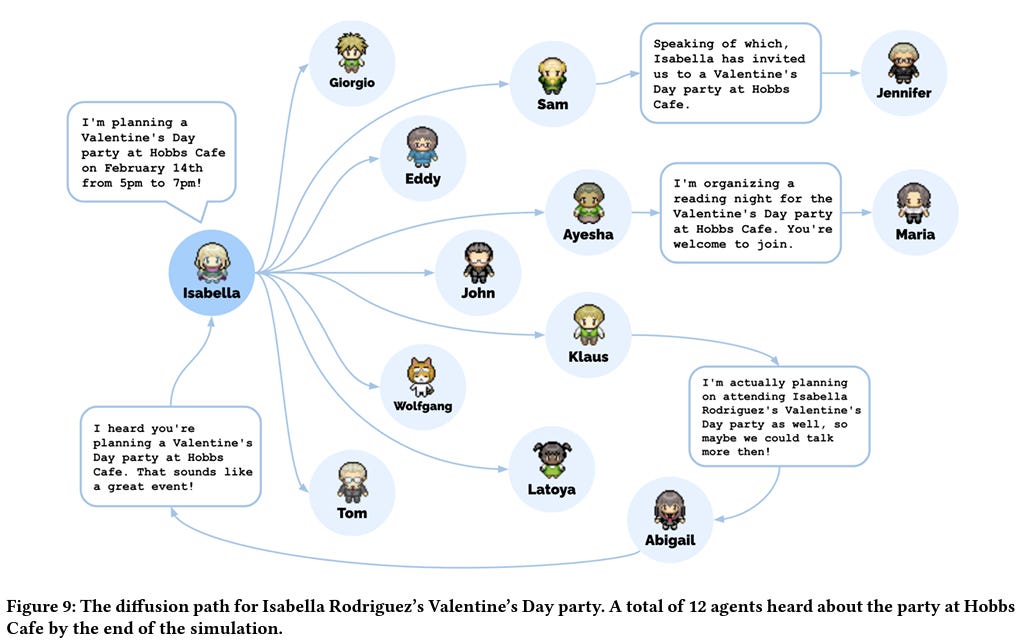

In the paper, the researchers mention an unexpected yet contextually appropriate interaction that emerged during the experiment. One of the generative agents organised a Valentine's Day party and invited other agents to attend. The agents engaged in conversations and activities related to Valentine's Day, such as discussing romantic relationships and giving gifts.

Within a day, half of Smallville had already heard about the party.
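If you want a feel for how a claim like "half the town has heard" could be checked, here is a rough Python sketch that replays a conversation log and counts who has been told. The `spread` function, the six-agent town, and the log itself are made up for illustration; they are not data or code from the paper.

```python
def spread(conversations, source):
    """Return the set of agents who have heard the news, given a
    time-ordered list of (speaker, listener) conversations."""
    informed = {source}
    for speaker, listener in conversations:
        # News only travels from someone who already knows it.
        if speaker in informed:
            informed.add(listener)
    return informed


# Hypothetical six-agent town and conversation log (not from the paper).
town = ["Isabella", "Yuriko", "Adam", "Agent D", "Agent E", "Agent F"]
log = [
    ("Yuriko", "Adam"),      # Yuriko has not heard yet, so nothing spreads
    ("Isabella", "Yuriko"),
    ("Yuriko", "Agent D"),
]

informed = spread(log, source="Isabella")
share = len(informed) / len(town)
print(sorted(informed), share)
```

In this made-up log, three of the six agents end up knowing about the party, so the share comes out at one half. Order matters: Yuriko's chat with Adam happens before she has heard anything, so Adam stays in the dark.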

Poor Isabella is going to be devastated when she realises that the party isn’t happening.

They are also prone to the odd hallucination, just like ChatGPT:

“Agents may also embellish their knowledge based on the world knowledge encoded in the language model used to generate their responses, as seen when Yuriko described her neighbor, Adam Smith, as a neighbor economist who authored Wealth of Nations, a book authored by an 18th-century economist of the same name.”

Are there any ethical concerns? (Yes, obviously.)

The paper raises several ethical concerns related to the deployment of generative agents, including:

a) The risk of users forming inappropriate relationships with the agents.

b) The potential impact of errors, which could lead to annoyance or harm.

c) The exacerbation of existing risks associated with generative AI, such as deepfakes, misinformation, and tailored persuasion.

d) The possibility of over-reliance on generative agents, displacing the role of humans and system stakeholders in the design process.

On point d, the researchers are still bullish about the potential applications of generative agents for prototyping designs and simulating scenarios. They just don’t see them as a direct replacement for people.

"We envision generative agents populating virtual reality metaverses and physical spaces as social robots in the future."

How could these agents be applied outside of gaming?

Widely and with great success, I believe, although such optimism will hardly surprise anyone who’s been tracking my latest EdTech venture.

Still, I do see a number of potential applications for these pint-sized pixellated pixies.

Let’s just take a look at what they got up to in the experiment:

Daily routines: The agents developed daily routines, such as eating breakfast, exercising, and going to work. These routines showcased the agents' ability to simulate plausible human-like behaviour.

Conversations: The agents engaged in various conversations with each other, discussing topics ranging from personal experiences to sharing opinions on a range of subjects. This demonstrated the agents' capability to generate contextually relevant and diverse dialogue.

Hobbies and interests: Some agents developed hobbies and interests, such as cooking, painting, or playing musical instruments. These activities indicate that the generative agents can simulate individual preferences and pursuits, making them more believable as human-like characters.

Collaboration and conflict: The agents occasionally collaborated, such as working together on a project or helping each other with tasks. They also experienced conflicts and disagreements, reflecting the complexities of human social dynamics.

They could be put to any use where we need to simulate situations. For example, they could be at the heart of human-centered design, skills training, and the development of social systems and theories.

I felt I had to share it with you.


They should have included a fifth setting … the pub