🍲 Sim-mer Down: Why AI's Perfect Simulations Might Be Missing the Point

Let's all learn from Kriegsspiel

Hello,

I’m working today in the only way God intended:

You’ll likely know that my company builds simulations. If you didn’t, now you do, and what follows will make more sense with that context. We build them for marketing, for AI skills, and soon for many other things too.

I’m fascinated by simulations of all kinds. They’re the only video games I’ve ever really taken to. Simulator rides are the only “rollercoasters” I can bear.

There’s something deeper, psychologically, that makes me prefer these simulations to life, no doubt. However, I believe they also teach us things we can’t learn out there in the scary, “real” world.

For example, the things in the story you’re about to read.

🚀 What's News: From Roblox to Replicants

This month, we’ve seen a number of developments in the simulation world:

Roblox can now build entire 3D worlds with a flick of generative AI magic. No more meticulous designing—just tell the AI what you want, and it appears.

Minecraft has gone all AI, generating real-time environments that change instantly based on user input. Drop a block of dirt, turn around, and suddenly it’s a medieval castle.

Stanford and DeepMind eggheads have taken it even further: give them two hours, and they can create an AI replica of your personality. It would probably take them two minutes to crack mine.

If we put these advances together, we can start to imagine simulations in which we can perfectly model both people and environments.

But beyond these flashy stories, what really caught my attention this month is a new book called “Playing With Reality: How Games Shape Our World” by Kelly Clancy.

It’s an excellent read that gets the coveted hi, tech. “Thumbs Up” 👍, and it contains some lessons we could do with heeding before we start building brand new, simulated worlds.

I particularly enjoyed the author’s point that while we love the notion that “AI will handle the drudgery, freeing us all up to be strategic geniuses”, the truth is that AI mastered chess a long time ago. It’s a pretty strategic game. Meanwhile, robots struggle to flip burgers or even walk like a human being, after billions of dollars of investment. Maybe we’ve got this all the wrong way round. They’ll do the strategy, we’ll serve up the fries.

However, we’re not quite ready to assume such pessimism here. The book also contains historical examples of how games have sharpened minds and led to real human progress.

One such example takes us back hundreds of years to a magical land called Prussia.

“Games are the closest representation of human life” - Leibniz

Kriegsspiel and AI: Learning Through the Fog

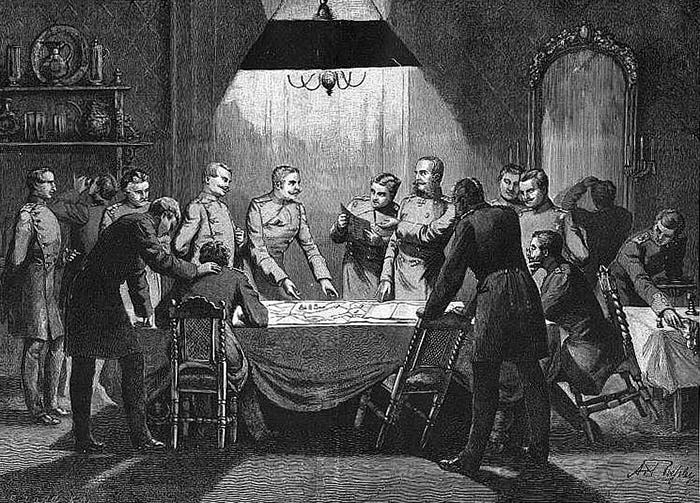

Back in the 1800s, a group of Prussian military officers gathered around a table to play Kriegsspiel—a simulation that was revolutionary for its time. The whole point of Kriegsspiel (it just means “war game” in German) wasn’t to recreate reality perfectly but to create an environment where players could think, adapt, and strategise under uncertainty.

Players gather round a table covered by a big map and sets of coloured blocks. Each team is given command of an imaginary army, and the teams take turns to make decisions, moving their pieces across the map as they go.

In 1824, in a Berlin residence temporarily cleared of cats (so they wouldn’t knock the game pieces all over the place), officers didn’t just “play” Kriegsspiel—they debated strategies, questioned assumptions, and honed new ways of thinking. For weeks. Yes, a game could go on for weeks at a time.

The game’s influence grew slowly but profoundly, eventually attracting the attention of the Russian Grand Duke, who summoned its creator to St. Petersburg for a whole summer of training. Clearly, this was more than just a game—it was a tool to shape thinking. The game was still in common use through World War II and military teams use it to this day, although it is no longer their chief technological aid.

The genius of Kriegsspiel was its use of the "fog of war": players didn’t have all the information. The umpires filtered what players knew, reflecting the imperfect information of real battlefields.

These weren't technical limitations - they were conscious design choices.

Why? Because the game's architects understood something significant: sometimes, not having all the answers helps you find better questions.

The fog of war wasn't there to make the game harder - it was there to make the thinking clearer.
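For the technically curious, the umpire's filtering job can be sketched in a few lines of Python. This is only an illustration of the idea, not the historical rulebook: the `Unit` class, the `umpire_report` function, the grid coordinates and the `sight_range` rule are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    name: str
    team: str
    position: tuple[int, int]  # (column, row) on a hypothetical map grid


def distance(a: tuple[int, int], b: tuple[int, int]) -> int:
    """Chebyshev distance: squares apart, counting diagonal steps as one."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def umpire_report(units: list[Unit], team: str, sight_range: int) -> list[Unit]:
    """Return only the units a team is allowed to know about.

    Assumed rule: a team always sees its own units, and sees an enemy unit
    only when it is within sight_range of at least one friendly unit.
    """
    friendly = [u for u in units if u.team == team]
    visible = list(friendly)
    for enemy in units:
        if enemy.team == team:
            continue
        if any(distance(enemy.position, f.position) <= sight_range for f in friendly):
            visible.append(enemy)
    return visible


# Only the umpire holds the full, true state of the board.
board = [
    Unit("Blue infantry", "blue", (0, 0)),
    Unit("Blue cavalry", "blue", (2, 1)),
    Unit("Red infantry", "red", (3, 2)),
    Unit("Red artillery", "red", (9, 9)),
]

blue_view = umpire_report(board, team="blue", sight_range=2)
print([u.name for u in blue_view])
# prints ['Blue infantry', 'Blue cavalry', 'Red infantry']
```

Blue never learns about the Red artillery at the far corner of the map. The limitation lives in one small, deliberate function, which is the design choice the Prussians made with human umpires.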

🧠 The Power of Purposeful Design

Fast forward to today's landscape. We’ll use marketing as our example.

Consider Google’s Performance Max campaigns. Google's AI promises to optimise everything - targeting, bidding, creative assets. But the marketers getting the best results aren't those who blindly trust the algorithm. They're the ones who use its limitations to develop crucial skills:

Strategic Diagnosis: Learning to spot patterns in the AI's successes and failures, developing frameworks for understanding when to trust it and when to override it

Resource Intelligence: Using the AI's targeting choices not as final answers but as prompts for deeper audience investigation

Risk Navigation: Building workflows that combine AI's efficiency with human judgment to gain a competitive advantage

At Novela, we're seeing this pattern across all our training simulations. Whether it's managing a crisis, allocating marketing budgets, or launching new products, the best learning happens not when we create “perfect” simulations, but when we design the right limitations.

We're not just developing new tools - we're developing new ways of thinking:

Instead of asking "What would the AI do?" we learn to ask "What is the AI missing?"

Instead of seeking perfect data, we get better at working with uncertainty

Instead of just automating decisions, we learn to make better ones

The Next Wave of Simulation

The future of simulation isn't just about better graphics or more accurate models. Here's what we're starting to see emerge:

Adaptive Learning Environments

Training scenarios that shift based on how you're handling uncertainty

AI that deliberately introduces specific types of ambiguity to develop particular skills

Systems that can identify and target gaps in strategic thinking

Simulations that adjust their complexity based on learner development
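As a rough sketch of how a scenario might shift with the learner, the function below nudges the share of hidden information up or down after each batch of decisions. Everything here is hypothetical: the name `next_fog_level`, the 0-to-1 scoring scale, the `target` success rate and the `step` size are assumptions for illustration, not a description of any real product.

```python
def next_fog_level(
    current: float,
    recent_scores: list[float],
    target: float = 0.7,
    step: float = 0.1,
) -> float:
    """Nudge the share of hidden information towards a target success rate.

    current: fraction of scenario information hidden from the learner (0 to 1).
    recent_scores: decision-quality scores between 0 and 1 for recent rounds.
    """
    if not recent_scores:
        return current  # nothing to learn from yet

    average = sum(recent_scores) / len(recent_scores)
    if average > target:
        current += step  # learner is coping well: hide more
    elif average < target:
        current -= step  # learner is struggling: reveal more

    # Keep the result inside the valid 0-to-1 range.
    return min(1.0, max(0.0, round(current, 2)))


print(next_fog_level(0.3, [0.9, 0.8, 0.85]))  # strong scores, so more fog
print(next_fog_level(0.3, [0.2, 0.4]))        # weak scores, so less fog
```

The point of the design is that difficulty is expressed as uncertainty rather than as extra content: the scenario stays the same, and only how much of it the learner can see changes.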

New Forms of Feedback

Instead of "right" or "wrong" decisions, analysis of decision-making processes

AI that can identify patterns in how people handle incomplete information

Systems that measure development of judgment over time

Tools that help learners understand their own cognitive biases

Collaborative Challenges

Multi-player simulations where different participants have different information

Scenarios that test both individual judgment and team coordination

AI-powered stakeholders that behave unpredictably but realistically

Training environments that mirror real-world communication challenges

Ethical Dimensions

Deliberate inclusion of ethical dilemmas in technical training

Scenarios that develop judgment about AI usage itself

Simulations that help organisations develop better AI governance

Training that builds awareness of AI's limitations alongside its capabilities

Final Thought

It’s true that we're living in an age of unprecedented simulation capabilities. We can model personalities, generate worlds, and optimise complex systems automatically. But the most powerful simulations won't be the ones that replicate reality perfectly - they'll be the ones that are designed to help us think better.

Yet today's promise that AI will illuminate any battlefield is a slightly false one, and it can lull us into letting our senses dull.

Just as those Prussian officers learned warfare better through carefully designed limitations than they would have through perfect battlefield replicas, today's learners might find their best insights not in flawless AI simulations, but in the thoughtful gaps we leave for human judgment to fill.