Hello,

On the morning of his daughter’s wedding, Philip II of Macedon sat down for one of his regular sessions with his soothsayer. The soothsayer said that all would be well; Philip would have a joyous day that would enrich his already bountiful life. Phew.

As it happened, Philip was assassinated at the wedding by one of his bodyguards. Philip’s son, Alexander the Great (at that point, merely Alexander the OK - at best), ascended to the throne in his place. Was he part of the plot? We’ll never know.

And what of the soothsayer, you say? Oh, he was murdered too.

They took their soothsaying seriously back then. And no, he didn’t see that coming either.

On an unrelated note, here’s what Gartner reckons will happen in 2024:

I like the early incorporation of “2024 and beyond” (italics mine). They’re at the stage now where they’re only predicting what may someday occur, on this planet or any other, in this reality or an alternate version, once.

Prediction one starts with: “2026,”

Which had me searching for:

All I’m saying is, maybe we should start taking soothsaying seriously again. We need some standards round here.

What we’ve been up to at hi, tech.:

At times, a human version of this:

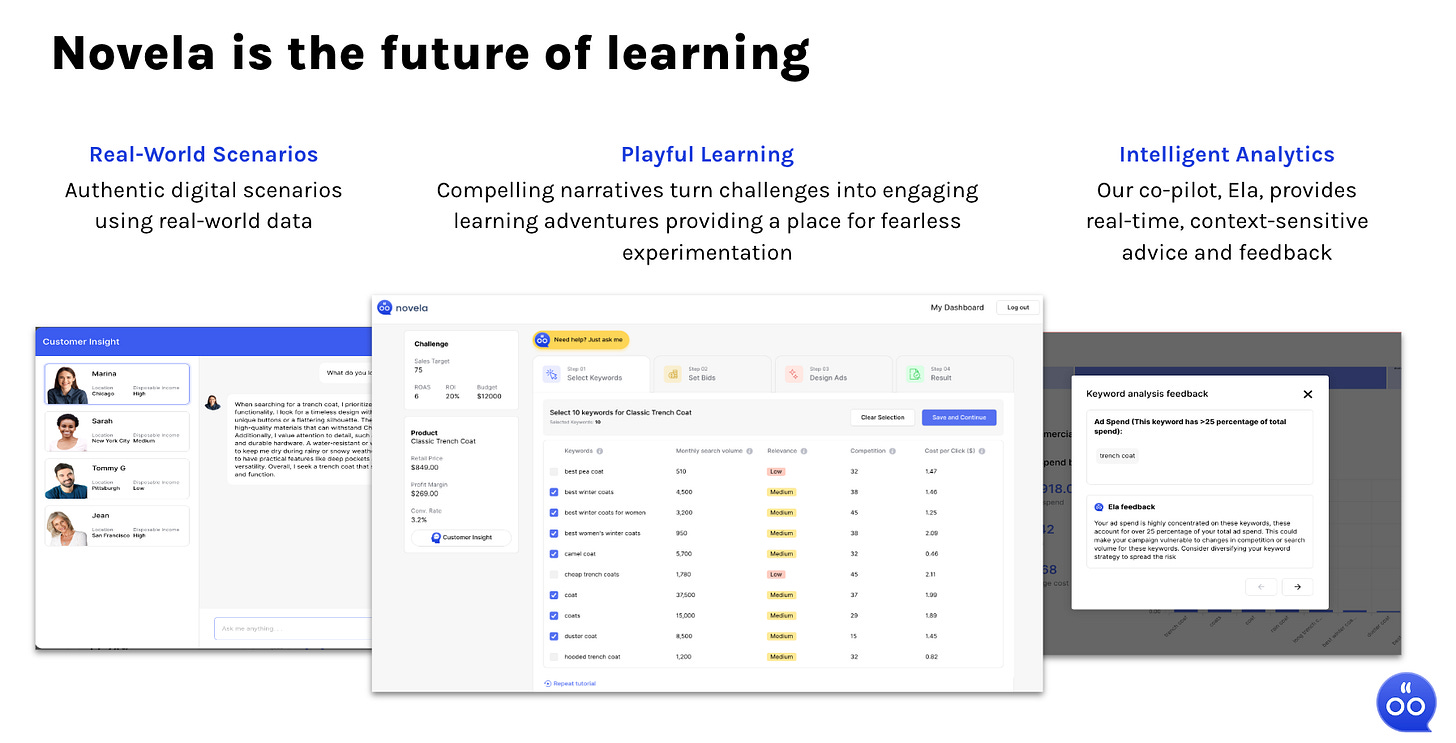

But also, working both manically and maniacally on Novela, the world’s greatest marketing simulations platform.

We’re live at Hult Business School, with Imperial College and Columbia Business School to follow within the next few weeks. The results look pretty good from the first few hundred users:

100% say Novela has helped them understand core marketing concepts

100% would recommend Novela to peers and for use in other classes

4.9/5 average score for the experience overall

And we’ve been jazzing up our pitch deck:

We hope to announce quite a few more partners very soon, and I expect to go full-time at the company in early 2024. So, that’s all exciting enough. But I’m here to share the excitement:

If your company, department, class, family, sports team, cult, or any other organisation needs to improve its marketing skills, let me know!

There’s a 10% discount for hi, tech. readers too - just tell them Clark sent you ;-)

🤖 Why do we want to believe that AI is “human”?

Well, we do this when:

We feel out of control

We need an explanation

By framing other intelligences as human, we bring them within our own Umwelt, as they say. In other words, we understand things we don’t understand by putting them into our own frame of experience. See also: Religion.

This is the same fallacy that leads us to oversimplify complex problems, thereby ensuring that we do not solve them. In our rush to simplify everything, we often forget that the world is sometimes just very complex indeed.

In the Middle Ages, people even held animals accountable for their “crimes”. A group of pigs was spared hanging only when the Duke of Burgundy intervened to pardon them. The pigs had eaten a lot of food from market stalls and the populace felt that these porcine predators must be punished. Luckily, the Duke knew better.

We laugh heartily now, but one can imagine people wanting to burn ChatGPT at the stake for stating a political opinion they don’t share.

Anyway, Google DeepMind has released Gemini, as you know. It is Google’s most capable AI model yet and it looks like it will be impressive. I’ve been trialling the available version today, but it doesn’t seem notably superior to last week’s Bard iteration.

Google will release the “Ultra” version in 2024, which it is pitching as a “GPT-4 killer”. The Pro model (available now) is for everyday use, similar to how people use GPT-3.5. Meanwhile, Nano will run locally on Pixel smartphones to help with on-device tasks.

Most commentators are focused on the competition between OpenAI and Google, but it’s also intriguing that Google has a specific new model for on-device actions. OpenAI has certainly discussed building a new piece of hardware, and one can imagine Apple entering this arena too.

Google has shared a few graphs comparing Gemini’s performance against OpenAI’s models in standardised tests. On the whole, by Google’s own numbers, Gemini does surpass GPT-4’s performance and that is a significant improvement for Google.

The demo videos make it look very impressive for multimodal search too. For example, Gemini can look at a short video of a starling murmuration and then code a simulation of their movement patterns. Again, this level of capability is certainly not available to jokers like me yet. I’ve been asking Gemini questions and I might as well be speaking to a Forbes article.

Overall, we can say we’re in the stage of “incremental improvements” with transformers and large language models. OpenAI has been rather quiet about GPT-5 and researchers are looking for new ways to deliver the next breakthrough moment.

It reminds me of when we were all talking about those smart speakers: Amazon Echo, Google Home, and the rest. The common notion was that once they could reach 95% accuracy for speech recognition, the technology would be ready for everyday adoption and use. As it happened, they became popular additions to many people’s homes without ever becoming essential. Accuracy levels are close to 99% these days, too.

Generative AI has many more use cases and it is clearly very powerful, but small improvements in accuracy are not the difference between being a buzzy new tool and becoming an integral part of our lives. Or, for that matter, raising those infernal questions about whether AI is ready to take over the world.

For now, I’m enjoying the reactions of our modern-day Nostradamuses on LinkedIn who of course saw this all coming, but preferred to keep quiet for once.

And they want you to believe their predictions for 2024 and beyond, too. 🤯