🎢 Whee, LLMs Doing Puzzles, Is Micro-Targeting Really Effective?

Welcome back!

It’s been a quiet few months for hi, tech., if not for tech itself. Writing this newsletter requires a certain tiggerish joie de vivre that has been in short supply for this particular writer.

Sure, I could still have written a dispassionate list of things that happened every week, but you’ve got ChatGPT and the BBC for that.

That said, I’ve been keeping tabs on the whole industry and I’ve spotted a few curious papers and stories out there, some of which we’ll discuss below!

🤔 What else has been going on?

Well, I’ve been touring around to promote Novela’s AI-powered simulations. Yeah, AI-powered, like everything else these days.

But I think ours serves an actual purpose…

Novela is live at colleges like Imperial, Columbia, McGill, and loads more. Over the next few months, we’re going to start working with some enterprise partners too.

We’re raising an angel round right now actually, so reply to this email for more information! We’d love to have you on board!

🧑‍🍳⚽️ Clark’s Classic Euro 2024 Cook-Off

I’m working my way through another charity cook-off. This time I’m raising money for a hedgehog charity and I’m cook-offing all the nations in Euro 2024. It’s looking like this so far:

You can follow along (and donate!) here: 👇👇👇

Now, it’s techin’ time.

TikTok launches an Instagram rival, Whee

TikTok has recently launched a new app called "Whee," aimed squarely at competing with Instagram. Except, not really. Rather, it’s trying to recreate what Instagram used to be, with a repeated focus on how photos are shared with “friends only”.

It has some unique(ish) features that TikTok hopes will set it apart. For example, Whee includes the ability to add headlines above photo captions, and its interface displays posts in a two-column grid similar to Pinterest. Wowee.

The app is being rolled out as a trial in select markets including Canada and Australia, marking TikTok’s continued expansion beyond short-form video into other types of social media content.

Yes, we’ve made it this far and I haven’t commented on the name yet. They say it’s because the app makes you feel like you’re on a rollercoaster, in which case it should be called “Get me off this thing right now, I’m going to die”.

And yes, it’ll lead to all manner of puerile jokes from less august publications. “Have you seen my Whee?”, they’ll say. “I just Wheed all over my phone”, those publications will josh. But we’re above that.

It sounds like a big story, but I’d wager it’s TikTok taking the Whee, if you will. They’re saying to Instagram: “You’re copying us and turning into TikTok, leaving some fertile ground for a friend-based photo-sharing app”, in the hope it spooks Instagram a little and sparks a retreat. It doesn’t cost much for TikTok to spin up an app like this and get us all talking, after all.

And hey, if people do actually love to Whee, they can make it a real thing.

It reminds me of that classic story of BMW “launching” a hatchback at a Munich car show in the early 90s. They made a big song and dance about launching this car and targeting a new market segment. However, they never really planned to build more than the prototype. They wanted one of their rivals to take the bait, go build a hatchback of their own, and test the market on BMW’s behalf. Mercedes fell for it and the A-Class was born. BMW sat back and watched, then launched the Mini.

Testing LLMs with Rare Languages: Insights from A New Study

In the world of artificial intelligence, understanding how well machines can process and reason through complex language tasks is pretty important. A recent study, "LINGOLY: A Benchmark of Olympiad-Level Linguistic Reasoning Puzzles in Low-Resource and Extinct Languages," dives into this challenge headfirst.

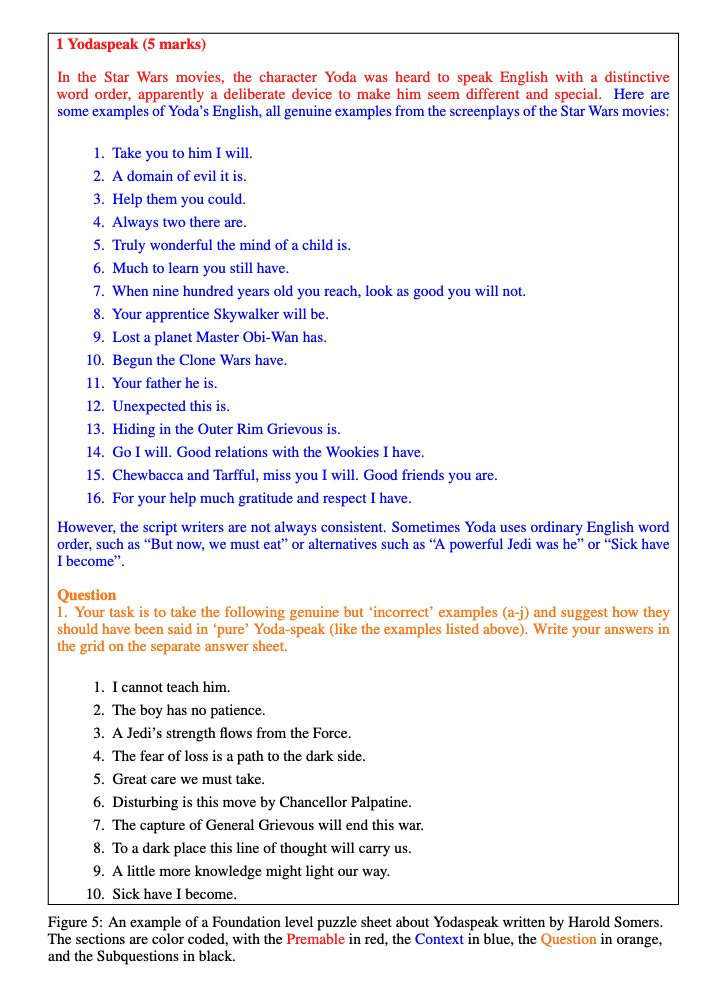

The LINGOLY benchmark is designed to evaluate advanced reasoning capabilities in large language models (LLMs) using challenging linguistic puzzles derived from the United Kingdom Linguistics Olympiad (UKLO).

Why It Matters

This study is significant because it pushes the boundaries of what AI can do with languages that are not widely spoken or well-documented. By focusing on low-resource and extinct languages, the LINGOLY benchmark aims to test whether these AI models are truly understanding and reasoning through the tasks, rather than just relying on memorized data. The results show that even the most advanced models struggle with these complex problems, highlighting areas where further development is needed.

Key Points:

Benchmark Composition:

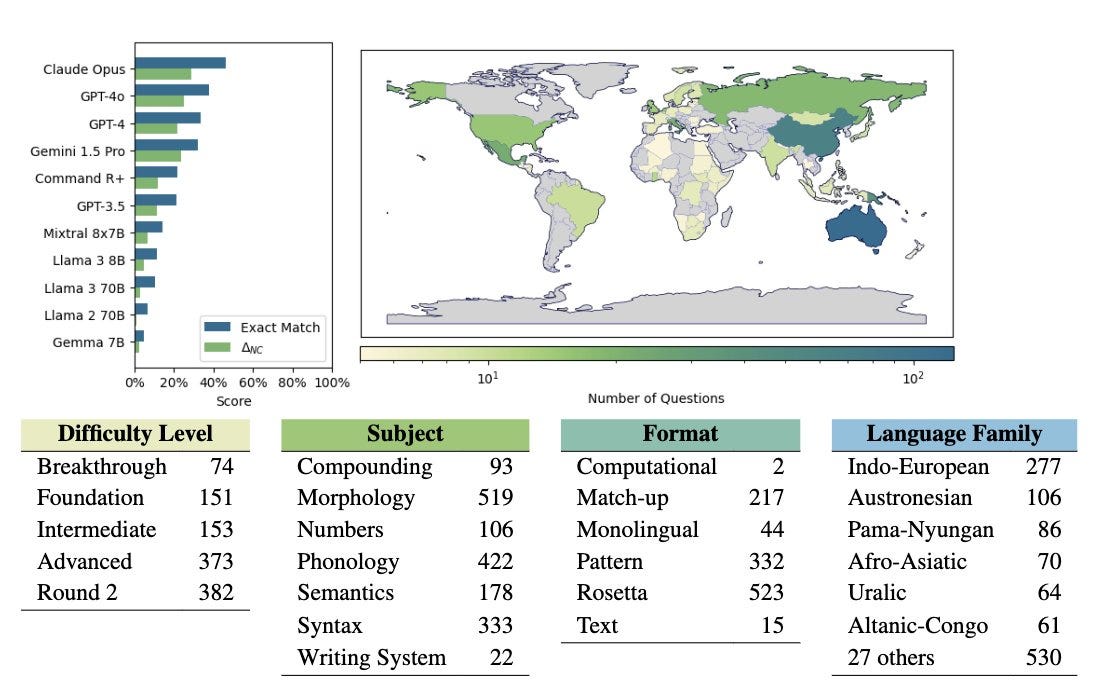

Contains 1,133 language problems across 6 formats and 5 difficulty levels.

Covers over 90 languages, focusing mainly on low-resource and extinct languages.

Problems are designed to be solved using provided context without requiring prior knowledge of the language.

For example, check out this Yoda-based one:

Results:

State-of-the-art LLMs perform poorly on higher difficulty problems, with the best model achieving only 38.7% accuracy.

Closed models generally outperform open models.

Higher resource languages tend to yield better scores, indicating challenges in true multi-step out-of-domain reasoning.

Challenges Highlighted:

Multi-step reasoning remains difficult for current models.

Performance on easier tasks suggests some reliance on memorization rather than genuine reasoning.

The benchmark provides a robust assessment by focusing on low-resource settings to ensure the tasks are novel to the models.
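To make the accuracy figures above a bit more concrete, here's a minimal Python sketch of exact-match scoring, plus one simple way to probe memorization: compare a model's score when it sees the puzzle's context with its score when it doesn't. This assumes exact-match grading after light normalization; the function names and toy answers are hypothetical, and LINGOLY's own scoring may differ in the details.

```python
def normalize(answer: str) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences don't count as errors."""
    return " ".join(answer.lower().split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that exactly match the reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must be the same length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


def context_gain(with_context: list[str], without_context: list[str], references: list[str]) -> float:
    """Accuracy with the puzzle's context minus accuracy without it (hypothetical helper).

    A small gain hints that the model may be answering from memory rather than
    reasoning over the examples it was given.
    """
    return exact_match_accuracy(with_context, references) - exact_match_accuracy(without_context, references)


# Toy, made-up answers purely for illustration
refs = ["the dog sees the man", "I ate fish", "they run"]
with_ctx = ["The dog sees the man", "I ate fish", "they ran"]
no_ctx = ["the man sees the dog", "I ate fish", "they walk"]

print(exact_match_accuracy(with_ctx, refs))  # 2 of 3 correct
print(context_gain(with_ctx, no_ctx, refs))  # 2/3 minus 1/3
```

A big gap means the context is doing the work; a small one suggests the model might be leaning on what it already knows.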

New Research on AI and Political Microtargeting: Surprising Findings

A recent study published in PNAS by Kobi Hackenburg and Helen Margetts explores the effectiveness of political microtargeting using large language models (LLMs) like GPT-4.

I found this fascinating because, well, we’re in an election year in the UK, US, and France. We also hear about how generative AI could be used to manipulate voters by creating unique content that is tailored to each individual’s prejudices. This is dangerous for obvious reasons, but also because it would be so challenging to police.

This study finds that current LLMs are in fact not as effective as one might expect when it comes to “microtargeting”. The research is also relevant for marketers who prioritize personalization over big, impactful ideas.

📊 Key Points:

The study involved 8,600 participants, generating thousands of unique messages tailored to individual demographics.

Despite the tailored approach, microtargeted messages were not statistically more persuasive than generic ones.

Both microtargeted and non-microtargeted messages had a broad persuasive impact, challenging the hype around microtargeting's superior effectiveness.

🧠 Implications for Advertising:

Similar trends have been observed in marketing research. For instance, in retargeting campaigns, brand-led messages can often outperform personalized ones.

Quality over specificity: This suggests that the overall quality and general appeal of a message is more critical than its specificity.

So yes, the study does reveal some limitations of GPT-4. But it also reveals something about how people are persuaded to take action. We assume “microtargeting” will work, but in the process we forget just how interconnected people are. We sometimes like the security of taking actions we know others will take, and big generic messages provide some of that comfort.

Until next time!